Generating a 3D human model from a single reference image is challenging because it requires inferring texture and geometry in invisible views while maintaining consistency with the reference image. Previous methods that rely on 3D generative models are limited by the availability of 3D training data. Optimization-based methods that lift text-to-image diffusion models to 3D generation often fail to preserve the texture details of the reference image, resulting in inconsistent appearance across views. In this paper, we propose HumanRef, a framework for generating 3D humans from a single-view input. To ensure that the generated 3D model is photorealistic and consistent with the input image, HumanRef introduces a novel method called reference-guided score distillation sampling (Ref-SDS), which effectively incorporates image guidance into the generation process. Furthermore, we introduce region-aware attention into Ref-SDS, ensuring accurate correspondence between different body regions. Experimental results demonstrate that HumanRef outperforms state-of-the-art methods in generating 3D clothed humans with fine geometry, photorealistic textures, and view-consistent appearance. We will make our code and model available upon acceptance.

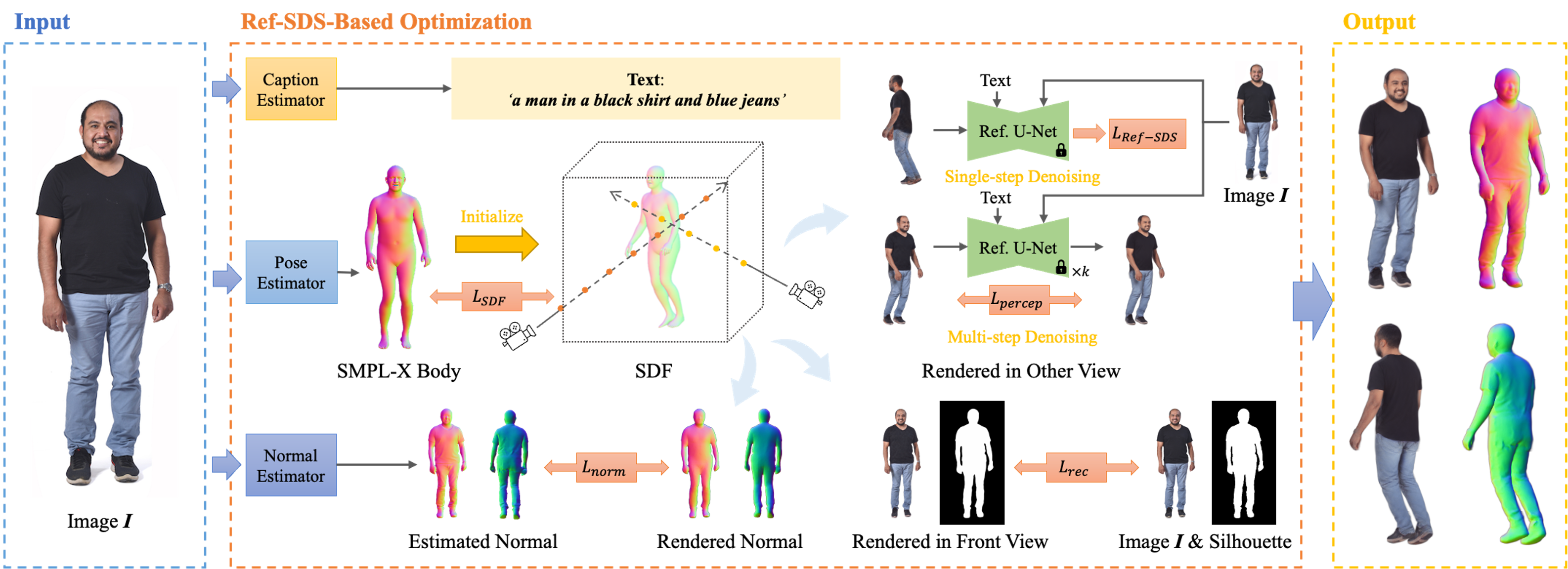

Overview of our proposed HumanRef for 3D clothed human generation from a single input image. Given an input image, we first use estimators to extract its text caption, SMPL-X body, front and back normal maps, and silhouette. A neural SDF network, initialized with the estimated SMPL-X body, is then optimized to generate the 3D human. To maintain appearance and pose consistency, we use the input image, silhouette, and normal maps as optimization constraints. For invisible regions, we introduce Ref-SDS, a method that distills realistic textures from a pretrained diffusion model, yielding sharp, realistic 3D clothed humans that align with the input image.
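The exact form of Ref-SDS is not given on this page. As background only, the following is a minimal sketch of plain score distillation sampling (SDS), the general technique that Ref-SDS builds on, written with NumPy. Everything named here is an assumption made for illustration: `sds_grad`, `add_noise`, and `toy_denoiser` are hypothetical helpers, the weighting `1 - alpha_bar_t` is one common choice, the toy denoiser stands in for a pretrained diffusion model, and the "rendering" is optimized directly as a pixel array rather than through a neural SDF renderer.

```python
import numpy as np

def add_noise(x0, eps, alpha_bar_t):
    """Forward diffusion: x_t = sqrt(a) * x0 + sqrt(1 - a) * eps."""
    return np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * eps

def sds_grad(x0, predict_noise, alpha_bar_t, rng):
    """Plain SDS gradient with respect to the rendered image x0.

    predict_noise is a hypothetical stand-in for a pretrained,
    already-conditioned denoiser. The weight w(t) = 1 - alpha_bar_t
    is an assumed (commonly used) choice.
    """
    eps = rng.standard_normal(x0.shape)          # sampled noise
    x_t = add_noise(x0, eps, alpha_bar_t)        # noised rendering
    eps_hat = predict_noise(x_t, alpha_bar_t)    # denoiser's noise estimate
    return (1.0 - alpha_bar_t) * (eps_hat - eps)

def toy_denoiser(target):
    """Hypothetical denoiser that believes the clean image is `target`."""
    def predict(x_t, alpha_bar_t):
        return (x_t - np.sqrt(alpha_bar_t) * target) / np.sqrt(1.0 - alpha_bar_t)
    return predict

rng = np.random.default_rng(0)
target = np.full((4, 4), 0.5)   # what the toy "diffusion prior" prefers
render = np.zeros((4, 4))       # the image being optimized (parameters = pixels)

# Gradient descent: each step nudges the rendering toward the prior's target.
for _ in range(200):
    render -= 0.5 * sds_grad(render, toy_denoiser(target), alpha_bar_t=0.5, rng=rng)
```

In a full pipeline the gradient would be backpropagated through a differentiable renderer into the 3D representation's parameters, and combined with reconstruction terms such as the image, silhouette, and normal-map constraints mentioned in the caption; this sketch omits all of that.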

@article{zhang2023humanref,
  title={HumanRef: Single Image to 3D Human Generation via Reference-Guided Diffusion},
  author={Zhang, Jingbo and Li, Xiaoyu and Zhang, Qi and Cao, Yanpei and Shan, Ying and Liao, Jing},
  journal={arXiv preprint arXiv:2311.16961},
  year={2023}
}